2021 IEEE Congress on Evolutionary Computation (CEC 2021)

June 28-July 1, 2021 in Kraków, Poland

http://iao.hfuu.edu.cn/bocia21

The Special Session on Benchmarking of Computational Intelligence Algorithms (BOCIA), part of the 2021 IEEE Congress on Evolutionary Computation (CEC 2021), took place on June 30, 2021 at 10am. The BOCIA Special Session Call for Papers (CfP) is available for download in PDF format and as a plain text file.

Computational Intelligence (CI), including Evolutionary Computation, Optimization, Machine Learning, and Artificial Intelligence, is a huge and expanding field that is rapidly gaining importance and attracting more and more interest from both academia and industry. It includes a wide and ever-growing variety of optimization and machine learning algorithms, which, in turn, are applied to an even wider and faster-growing range of problem domains. For all of these domains and application scenarios, we want to pick the best algorithms. In fact, we want to do more: we want to improve upon the best algorithm. This requires a deep understanding of the problem at hand, the performance of the algorithms we have for that problem, the features that make instances of the problem hard for these algorithms, and the parameter settings for which the algorithms perform best. Such knowledge can only be obtained empirically, by collecting data from experiments, analyzing this data statistically, and mining new information from it. Benchmarking has been the engine driving research in Computational Intelligence for decades, yet its potential has not been fully explored.

The goal of this special session was to solicit original research on benchmarking: works that contribute to the domain of benchmarking of algorithms from all fields of Computational Intelligence by adding new theoretical or practical knowledge. Papers that only apply benchmarking were not in the scope of the special session.

This special session was technically supported by the IEEE CIS Task Force on Benchmarking.

Topics of Interest

This special session brought together experts on benchmarking of Evolutionary Computation, Machine Learning, Optimization, and Artificial Intelligence. It provided a common forum for them to exchange findings, to explore new paradigms for performance comparison, and to discuss issues such as

- mining of higher-level information from experimental results

- modelling and visualization of algorithm behaviors and performance

- statistics for performance comparison (robust statistics, PCA, ANOVA, statistical tests, ROC, …)

- evaluation of real-world goals such as robustness, reliability, and implementation issues

- theoretical results for algorithm performance comparison

- comparison of theoretical and empirical results

- new benchmark problems

- automatic algorithm configuration and selection

- the comparison of algorithms in "non-traditional" scenarios such as multi- or many-objective domains, parallel implementations (e.g., using GPUs, MPI, CUDA, clusters, or running in clouds), large-scale problems or problems where objective function evaluations are costly, dynamic problems or problems where the objective functions involve randomized simulations or noise, deep learning, and big data

- comparative surveys with new ideas on dos and don'ts, i.e., best and worst practices, for algorithm performance comparison; tools for experiment execution, result collection, and algorithm comparison

All accepted papers in this session were included in the Proceedings of the 2021 IEEE Congress on Evolutionary Computation published by IEEE Press and indexed by EI.

Program

- Jakub Kudela. Novel Zigzag-based Benchmark Functions for Bound Constrained Single Objective Optimization.

slides@iao / slides@amazon / video@iao / video@amazon

The development and comparison of new optimization methods in general, and evolutionary algorithms in particular, rely heavily on benchmarking. In this paper, the construction of novel zigzag-based benchmark functions for bound constrained single objective optimization is presented. The new benchmark functions are non-differentiable, highly multimodal, and have a built-in parameter that controls the complexity of the function. To investigate the properties of the new benchmark functions, two of the best algorithms from the CEC20 Competition on Single Objective Bound Constrained Optimization, as well as one standard evolutionary algorithm, were used in a computational study. The results of the study suggest that the new benchmark functions are very well suited for algorithmic comparison.
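The abstract attributes three properties to the new functions: non-differentiability, high multimodality, and a built-in parameter controlling complexity. The Python sketch below illustrates how a zigzag (triangle-wave) term can produce all three. It is a hypothetical construction for illustration only, not the function defined in the paper; the names `zigzag`, `zigzag_benchmark`, `k`, and `amplitude` are our own assumptions.

```python
def zigzag(t: float, period: float = 1.0) -> float:
    """Triangle wave with values in [0, 1]: 0 at multiples of `period`, 1 halfway between them."""
    phase = (t / period) % 1.0            # position within the current period, in [0, 1)
    return 1.0 - abs(2.0 * phase - 1.0)   # piecewise linear; the kinks make it non-differentiable


def zigzag_benchmark(x, k: int = 4, amplitude: float = 1.0) -> float:
    """Hypothetical test function: |x_i| plus a zigzag term with period 1/k per coordinate.

    Every term is >= 0 and both parts vanish at x_i = 0, so the global minimum is 0 at the origin.
    If 2 * k * amplitude > 1, every nonzero multiple of 1/k is an additional local minimum
    in each coordinate, so a larger k means more local optima.
    """
    return sum(abs(xi) + amplitude * zigzag(xi, 1.0 / k) for xi in x)
```

For example, `zigzag_benchmark([0.25])` with the defaults returns 0.25, which is lower than the values at 0.24 and 0.26, so x = 1/k is a local minimum in which a local search could get stuck. The zigzag slope (2 * k * amplitude) exceeding the slope of |x_i| is what creates these traps.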

- Rafal Biedrzycki. Comparison with State-of-the-Art: Traps and Pitfalls.

slides@iao / slides@amazon / video@iao / video@amazon

When a new metaheuristic is proposed, its results are compared with those of state-of-the-art methods. The results of that comparison are produced by implementations of the algorithms, but the origin, names, and versions of these implementations are usually not revealed. Instead, only the papers that introduced the state-of-the-art methods are cited. This approach is generally flawed because articles usually describe algorithms in a way that explains the underlying idea but omits the technical details. Developers therefore have to fill in these details, and they may do so in different ways. The paper shows that even implementations made by a single author who is the creator of an algorithm give results that differ considerably from one another. Therefore, for comparison purposes, the best possible implementation should be identified and used. To illustrate how details hidden in the code of implementations influence the quality of the results, the sources of quality differences are tracked down for selected implementations. It was found that the sources of the differences are hidden in auxiliary code and also stem from implementing different versions of an algorithm that is still under development. These findings imply best-practice recommendations for researchers, implementation developers, and authors of algorithms.

- Mustafa Misir. Benchmark Set Reduction for Cheap Empirical Algorithmic Studies.

slides@iao / slides@amazon / video@iao / video@amazon

The present paper introduces a benchmark set reduction strategy that can reduce the experimental evaluation cost of algorithmic studies. Algorithm design is an iterative development process within a test-revise loop. Starting from its initial version, a devised algorithm needs to be tested to reveal its strengths and drawbacks. Its shortcomings lead the designers to modify the algorithm. Then, the modified algorithm is tested again. This two-step pipeline is repeated until the algorithm meets expectations, such as delivering state-of-the-art results on a set of benchmarks for a specific problem. This recurring testing step can be a burden for those with limited computational resources. The computational cost mainly stems from either a high per-instance runtime or a large benchmark set. This study focuses on the case where a target algorithm needs to be assessed across a large benchmark set. The idea is to automatically extract a problem instance representation from instance-algorithm performance data. The derived representation, in the form of latent features, is used to determine a small yet representative subset of a given large set of instances. The proposed strategy is investigated on 9720 instances of the Traveling Thief Problem (TTP). The corresponding performance data is collected with the help of 21 TTP algorithms. The resulting computational analysis showed that the proposed method is capable of substantially reducing the size of the benchmark instance set.
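The reduction strategy described above has two steps: derive latent instance features from an instance-by-algorithm performance matrix, then pick a small subset of instances that covers the feature space. The Python sketch below is one possible realization under our own assumptions: it uses a PCA-style projection (power iteration on the covariance of the performance matrix) for the latent features and greedy farthest-point (k-center) selection for the subset. The paper's actual factorization and selection method may differ, and all function names here are hypothetical.

```python
import math
import random


def _normalize(v):
    """Scale v to unit length (returned unchanged if it is the zero vector)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0.0 else v


def top_eigenvectors(cov, r, iters=300, seed=0):
    """Leading r eigenvectors of a symmetric PSD matrix via power iteration with deflation."""
    m = len(cov)
    cov = [row[:] for row in cov]
    rng = random.Random(seed)
    vectors = []
    for _ in range(r):
        v = _normalize([rng.random() + 0.1 for _ in range(m)])
        for _ in range(iters):
            v = _normalize([sum(cov[a][b] * v[b] for b in range(m)) for a in range(m)])
        # Rayleigh quotient estimates the eigenvalue; subtracting lam * v v^T removes this direction.
        lam = sum(v[a] * sum(cov[a][b] * v[b] for b in range(m)) for a in range(m))
        vectors.append(v)
        cov = [[cov[a][b] - lam * v[a] * v[b] for b in range(m)] for a in range(m)]
    return vectors


def latent_features(perf, r=2):
    """Center each algorithm's column, then project every instance onto the top-r directions."""
    n, m = len(perf), len(perf[0])
    means = [sum(row[b] for row in perf) / n for b in range(m)]
    centered = [[row[b] - means[b] for b in range(m)] for row in perf]
    cov = [[sum(row[a] * row[b] for row in centered) / n for b in range(m)] for a in range(m)]
    dirs = top_eigenvectors(cov, r)
    return [[sum(row[b] * d[b] for b in range(m)) for d in dirs] for row in centered]


def select_representatives(features, k):
    """Greedy farthest-point (k-center) selection; returns indices of chosen instances."""
    count, dim = len(features), len(features[0])
    centroid = [sum(f[c] for f in features) / count for c in range(dim)]
    # Start from the instance closest to the centroid, then repeatedly add the
    # instance that is farthest from everything chosen so far.
    chosen = [min(range(count), key=lambda i: math.dist(features[i], centroid))]
    nearest = [math.dist(f, features[chosen[0]]) for f in features]
    while len(chosen) < min(k, count):
        nxt = max(range(count), key=lambda i: nearest[i])
        if nearest[nxt] == 0.0:
            break  # all remaining instances duplicate a chosen one
        chosen.append(nxt)
        nearest = [min(nearest[i], math.dist(features[i], features[nxt])) for i in range(count)]
    return chosen
```

In the TTP study described above, `perf` would be a 9720 × 21 matrix (instances × algorithms); how the raw results are normalized before the projection is a further design choice this sketch leaves open. Farthest-point selection is used here because it is deterministic and guarantees coverage of distant regions of the feature space; clustering-based selection would be an equally plausible alternative.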

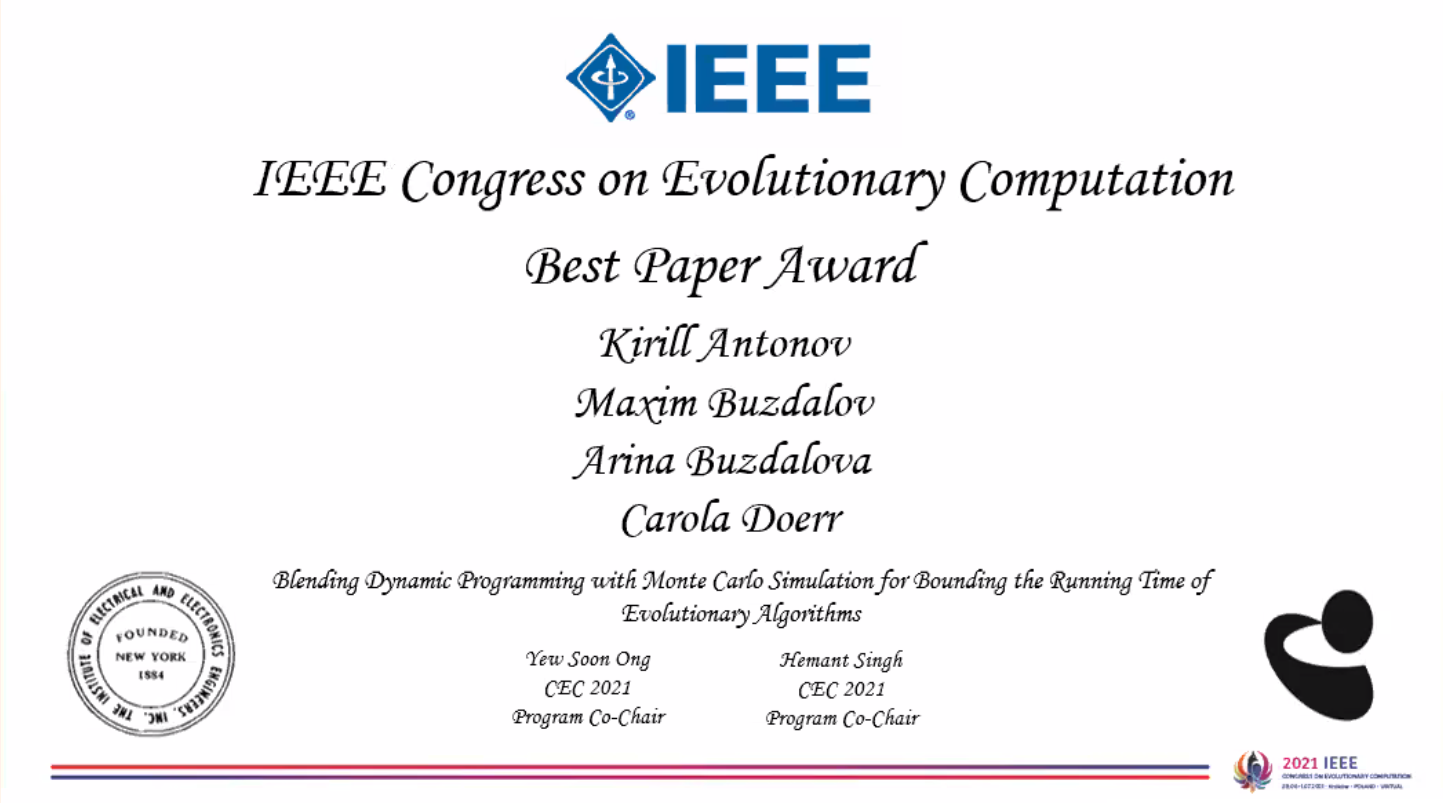

- Kirill Antonov, Maxim Buzdalov, Arina Buzdalova, and Carola Doerr. Blending Dynamic Programming with Monte Carlo Simulation for Bounding the Running Time of Evolutionary Algorithms.

slides@iao / slides@amazon / video@iao / video@amazon

This paper was nominated for the best paper award of the conference – and won it!

With the goal of providing absolute lower bounds for the best possible running times that can be achieved by (1+λ)-type search heuristics on common benchmark problems, we recently suggested a dynamic programming approach that computes optimal expected running times and the regret values inferred when deviating from the optimal parameter choice. Our previous work is restricted to problems for which transition probabilities between different states can be expressed by relatively simple mathematical expressions. With the goal of covering broader sets of problems, we suggest in this work an extension of the dynamic programming approach to settings in which it may be difficult or impossible to compute the transition probabilities exactly, but it is possible to approximate them numerically, up to arbitrary precision, by Monte Carlo sampling. We apply our hybrid Monte Carlo dynamic programming approach to a concatenated jump function and demonstrate how the obtained bounds can be used to gain a deeper understanding of parameter control schemes.
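The core idea of plugging Monte Carlo estimates of transition probabilities into a dynamic program can be sketched on a much simpler setting than the paper's concatenated jump function. Assumptions (all ours): an elitist (1+λ) EA with standard bit mutation on OneMax, the state is the current fitness, the controlled parameter is the mutation rate chosen per state from a small candidate set, and cost is counted in fitness evaluations (λ per generation). Because an elitist algorithm never moves to a lower fitness, the minimal expected remaining cost can be computed backwards from the optimum as `E_i = min over rates of (λ*S + sum_{j>i} c_j*E_j) / (S - c_i)`, where `c_j` counts how many of `S` sampled generations from state `i` ended in state `j`. This is a hedged illustration, not the authors' implementation; the function names are hypothetical.

```python
import math
import random


def next_state(i, n, rate, lam, rng):
    """One generation of an elitist (1+lam) EA on OneMax, starting from fitness i.

    Offspring fitness = i - (ones flipped) + (zeros flipped) under standard bit mutation.
    The parent is kept unless an offspring is at least as good, so the state never decreases.
    """
    best = i
    for _ in range(lam):
        lost = sum(rng.random() < rate for _ in range(i))
        gained = sum(rng.random() < rate for _ in range(n - i))
        best = max(best, i - lost + gained)
    return best


def mc_dp_expected_times(n, rates, lam=1, samples=3000, seed=1):
    """Backward DP over fitness states with Monte Carlo estimated transition counts.

    expected[i] approximates the minimal expected number of evaluations to reach fitness n
    from fitness i when the mutation rate may be chosen per state from `rates`.
    """
    rng = random.Random(seed)
    expected = [math.inf] * n + [0.0]   # the optimum (state n) needs no further evaluations
    best_rate = [None] * (n + 1)
    for i in range(n - 1, -1, -1):
        for rate in rates:
            counts = [0] * (n + 1)
            for _ in range(samples):
                counts[next_state(i, n, rate, lam, rng)] += 1
            moves = samples - counts[i]  # sampled generations that left state i
            if moves == 0:
                continue                 # no improvement observed: treat as infinite cost
            # E_i = (lam * S + sum_{j>i} c_j * E_j) / (S - c_i); skip zero counts to avoid 0 * inf
            weighted = sum(counts[j] * expected[j] for j in range(i + 1, n + 1) if counts[j])
            value = (lam * samples + weighted) / moves
            if value < expected[i]:
                expected[i], best_rate[i] = value, rate
    return expected, best_rate
```

A call such as `mc_dp_expected_times(10, [0.1, 0.2, 0.3])` returns the estimated optimal expected evaluations per fitness state and the rate chosen in each state. The regret of a non-optimal rate at state `i` could be read off analogously as the difference between its `value` and `expected[i]`. Unlike the paper's approach, this sketch simply uses the empirical frequencies and does not control the estimation error.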

- Peng Wang and Guosong Yang. Using Double Well Function as a Benchmark Function for Optimization Algorithm.

slides@iao / slides@amazon / video@iao / video@amazon

The double well function (DWF) is an important theoretical model originating from quantum physics and has been used to describe the energy constraint problem in quantum mechanics and structural chemistry. Although its form may vary, the DWF has two different local minima in the one-dimensional case, and the number of local minima increases as its dimension grows. As a multi-stable function, the DWF is assumed to be a potential candidate for testing the performance of heuristic optimization algorithms, which aim to find the global minimum. To verify this idea, a typical DWF is employed in this paper, a mathematical analysis of it is presented, and its properties as a benchmark function are discussed in different cases. In addition, we conducted a set of experiments using a few optimization algorithms, such as the multi-scale quantum harmonic oscillator algorithm and the covariance matrix adaptation evolution strategy, and the results of these numerical experiments illustrate the analysis. Moreover, to guide the design of an ideal DWF for use as a benchmark function in experiments, different values of the decisive parameters were tested according to our analysis, and some useful rules were derived from the discussion of the results.
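The abstract states that a DWF has two different local minima in one dimension and that the number of local minima grows with the dimension. The Python sketch below illustrates this with a hypothetical tilted double well, g(x) = (x^2 - 1)^2 + delta * x, summed over all coordinates. This form, the tilt parameter `delta`, and all function names are our own assumptions and not necessarily the "typical DWF" analyzed in the paper. Because the d-dimensional function is separable, each coordinate can sit in either well, giving 2^d local minima, of which only the one with every coordinate in the deeper well (the left one for delta > 0) is global.

```python
def tilted_double_well_1d(x: float, delta: float = 0.3) -> float:
    """Hypothetical 1D DWF: wells near x = -1 and x = +1; delta > 0 makes the left well deeper."""
    return (x * x - 1.0) ** 2 + delta * x


def tilted_double_well(x, delta: float = 0.3) -> float:
    """Separable d-dimensional version: the sum of the 1D function over all coordinates."""
    return sum(tilted_double_well_1d(xi, delta) for xi in x)


def local_minima_1d(delta: float = 0.3, lo: float = -2.0, hi: float = 2.0, steps: int = 40001):
    """Grid points strictly lower than both neighbours (approximate 1D local minima)."""
    h = (hi - lo) / (steps - 1)
    xs = [lo + k * h for k in range(steps)]
    ys = [tilted_double_well_1d(x, delta) for x in xs]
    return [xs[k] for k in range(1, steps - 1) if ys[k] < ys[k - 1] and ys[k] < ys[k + 1]]


def count_local_minima(d: int, delta: float = 0.3) -> int:
    """Each coordinate independently sits in one of the 1D minima, so counts multiply."""
    return len(local_minima_1d(delta)) ** d
```

For this particular form, the derivative g'(x) = 4x^3 - 4x + delta has three real roots (two minima and one maximum) only while |delta| < 8/(3*sqrt(3)), roughly 1.54; a larger tilt removes the shallow well. Setting delta = 0 yields the symmetric variant in which all 2^d minima are equally deep, which is one reason a tilt (or a similar asymmetry) is useful when a unique global optimum is desired.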

Important Dates

| Milestone | Date |
|---|---|
| Paper Submission Deadline | 21 February 2021 |
| Notification of Acceptance | 6 April 2021 |
| Camera-Ready Copy Due | 23 April 2021 |
| Author Registration | 23 April 2021 |
| Conference Presentation | 30 June 2021 |

More information can be found in the Important Dates section of the CEC 2021 website.

Instructions for Authors

All papers submitted to our special session must follow the CEC 2021 submission guidelines.

Each paper must be in PDF format and should have 6 to 8 pages, including figures, tables, and references. The style files for IEEE Conference Proceedings – U.S. letter size – should be used as templates for your submission (LaTeX or Word).

All papers must be submitted through the IEEE CEC 2021 online submission system (https://ieee-cis.org/conferences/cec2021/upload.php).

Special session papers are treated the same as regular conference papers. Make sure to specify that your paper is for "SS-18. Benchmarking of Computational Intelligence Algorithms (BOCIA)".

In order to participate in this special session, full or student registration for CEC 2021 is required. All papers accepted and presented at CEC 2021 will be included in the conference proceedings published in IEEE Xplore.

Chairs

- Aleš Zamuda, University of Maribor, Slovenia

- Thomas Weise, Institute of Applied Optimization, Hefei University, Hefei, China

- Markus Wagner, University of Adelaide, Adelaide, SA, Australia

International Program Committee

- Abhishek Awasthi, Fujitsu, München (Munich), Germany

- Thomas Bäck, Leiden University, Leiden, The Netherlands

- Thomas Bartz-Beielstein, Technical University of Cologne, Köln (Cologne), Germany

- Andreas Beham, FH Upper Austria, Hagenberg, Austria

- Jakob Bossek, The University of Adelaide, Adelaide, Australia

- Maxim Buzdalov, ITMO University, St. Petersburg, Russia

- Josu Ceberio Uribe, University of the Basque Country, Bilbao, Spain

- Wenxiang Chen, LinkedIn, Sunnyvale, CA, USA

- Yan Chen, Institute of Applied Optimization, Hefei University, Hefei, China

- Marco Chiarandini, University of Southern Denmark, Odense M, Denmark

- Francisco Chicano, University of Málaga, Málaga, Spain

- Raymond Chiong, The University of Newcastle, Callaghan, Australia

- Carola Doerr, CNRS and Sorbonne University, Paris, France

- Johann Dreo, Thales Research & Technology, Palaiseau, France

- Tome Eftimov, Jožef Stefan Institute, Ljubljana, Slovenia

- Mohamed El Yafrani, Aalborg University, Aalborg Ø, Denmark

- Marcus Gallagher, University of Queensland, Brisbane, Australia

- Pascal Kerschke, Westfälische Wilhelms-Universität Münster, Münster, Germany

- Anna V. Kononova, LIACS, Leiden University, Leiden, The Netherlands

- Algirdas Lančinskas, Vilnius University, Lithuania

- Jörg Lässig, University of Applied Sciences Zittau/Görlitz, Görlitz, Germany

- Bin Li, University of Science and Technology of China, Hefei, China

- Jinlong Li, University of Science and Technology of China, Hefei, China

- Pu Li, Technische Universität Ilmenau, Ilmenau, Germany

- Jing Liang, Zhengzhou University, Zhengzhou, China

- Manuel López-Ibáñez, University of Málaga, Spain and University of Manchester, Manchester, UK

- Yi Mei, Victoria University of Wellington, Wellington, New Zealand

- Boris Naujoks, TH Köln, Cologne, Germany

- Antonio J. Nebro, University of Málaga, Málaga, Spain

- Miguel Nicolau, University College Dublin, Ireland

- Pietro S. Oliveto, University of Sheffield, UK

- Karol Opara, Systems Research Institute, Polish Academy of Sciences, Warsaw, Poland

- Patryk Orzechowski, University of Pennsylvania, Philadelphia, PA, USA

- Mike Preuss, LIACS, Leiden University, Leiden, The Netherlands

- Danilo Sipoli Sanches, Federal University of Technology – Paraná, Cornélio Procópio, Brazil

- Kate Smith-Miles, The University of Melbourne, Melbourne, VIC, Australia

- Ponnuthurai Nagaratnam Suganthan, Nanyang Technological University, Singapore

- Heike Trautmann, Westfälische Wilhelms-Universität Münster, Münster, Germany

- Markus Ullrich, University of Applied Sciences Zittau/Görlitz, Görlitz, Germany

- Ryan J. Urbanowicz, University of Pennsylvania, Philadelphia, PA, USA

- Markus Wagner, University of Adelaide, Adelaide, SA, Australia

- Stefan Wagner, FH Upper Austria, Hagenberg, Austria

- Hao Wang, LIACS, Leiden University, Leiden, The Netherlands

- Thomas Weise, Institute of Applied Optimization, Hefei University, Hefei, China

- Carsten Witt, Technical University of Denmark, Denmark

- Borys Wróbel, Adam Mickiewicz University, Poland

- Zhize Wu, Institute of Applied Optimization, Hefei University, Hefei, China

- Furong Ye, LIACS, Leiden University, Leiden, The Netherlands

- Aleš Zamuda, University of Maribor, Slovenia

- Xingyi Zhang, Anhui University, Hefei, China

Chair Biographies

Dr. Aleš Zamuda is an Associate Professor and Senior Research Associate at the University of Maribor (UM), Slovenia. He received his Ph.D. (2012), M.Sc. (2008), and B.Sc. (2006) degrees in computer science from UM. He is a management committee (MC) member for Slovenia in the European Cooperation in Science and Technology (COST) actions CA15140 (ImAppNIO - Improving Applicability of Nature-Inspired Optimisation by Joining Theory and Practice) and IC1406 (formerly, cHiPSet - High-Performance Modelling and Simulation for Big Data Applications), and a General Assembly member of the H2020 project DAPHNE (Integrated Data Analysis Pipelines for Large-Scale Data Management, HPC, and Machine Learning). He is an IEEE Senior Member, vice-chair of the IEEE Task Force on Benchmarking, vice-chair of the IEEE Slovenia Section, chair of IEEE Slovenia CIS, and a member of IEEE CIS, IEEE GRSS, IEEE OES, ACM, and ACM SIGEVO. He is also vice-chair of the working groups on Benchmarking at ImAppNIO and DAPHNE and an editorial board member (associate editor) of Swarm and Evolutionary Computation (2019 IF=6.912). His areas of computer science applications include differential evolution, multiobjective optimization, evolutionary robotics, artificial life, and cloud computing, currently with an h-index of 20, 50 publications, and 1236 citations on Scopus. He won the IEEE R8 SPC 2007 award, the IEEE CEC 2009 ECiDUE, the 2016 Danubius Young Scientist Award, and top 1% reviewer recognition at the 2017 and 2018 Publons Peer Review Awards, including reviews for over 50 journals and 120 conferences.

Prof. Dr. Thomas Weise obtained his MSc in Computer Science in 2005 from the Chemnitz University of Technology and his PhD from the University of Kassel in 2009. He then joined the University of Science and Technology of China (USTC) as a PostDoc and subsequently became Associate Professor at the USTC-Birmingham Joint Research Institute in Intelligent Computation and Its Applications (UBRI) at USTC. In 2016, he joined Hefei University as Full Professor to found the Institute of Applied Optimization (IAO) of the School of Artificial Intelligence and Big Data. Prof. Weise has more than a decade of experience as a full-time researcher in China, having contributed significantly to both fundamental and applied research. He has published more than 90 scientific papers in international peer-reviewed journals and conferences. His book "Global Optimization Algorithms – Theory and Application" has been cited more than 900 times. He is a member of the Editorial Board of the Applied Soft Computing Journal and has acted as reviewer, editor, or program committee member at 80 different venues.

Dr. Markus Wagner is a Senior Lecturer at the School of Computer Science, University of Adelaide, Australia. He did his PhD studies at the Max Planck Institute for Informatics in Saarbrücken, Germany, and at the University of Adelaide, Australia. His research topics range from mathematical runtime analysis of heuristic optimization algorithms and theory-guided algorithm design to applications of heuristic methods to renewable energy production, professional team cycling, and software engineering. So far, he has been a program committee member 30 times, and he has written over 70 articles with over 70 different co-authors. He has chaired several education-related committees within the IEEE CIS, was Co-Chair of ACALCI 2017, and was General Chair of ACALCI 2018.

Hosting Event

The 2021 IEEE Congress on Evolutionary Computation (CEC 2021), June 28-July 1, 2021 in Kraków, Poland.

IEEE CEC 2021 is a world-class conference that brings together researchers and practitioners in the field of evolutionary computation and computational intelligence from around the globe. The Congress will encompass keynote lectures, regular and special sessions, tutorials, and competitions, as well as poster presentations. In addition, participants will be treated to a series of social functions, receptions, and networking events.

IEEE CEC 2021 will be held in Kraków, Poland. A pearl amongst Polish cities, Kraków is one of the architectural treasures in the world on the UNESCO World Cultural Heritage List.

Links and News

Current Related Events

Past Related Events

- Special Issue on Benchmarking of Computational Intelligence Algorithms in the Applied Soft Computing Journal (2018-2020, Completed)

- 2020 Good Benchmarking Practices for Evolutionary Computation (BENCHMARK@PPSN'20) Workshop

- 2020 Good Benchmarking Practices for Evolutionary Computation (BENCHMARK@GECCO'20) Workshop

- 2019 Black Box Discrete Optimization Benchmarking (BB-DOB'19) Workshop

- 2019 Special Session on Benchmarking of Evolutionary Algorithms for Discrete Optimization (BEADO'19)

- 2018 Black-Box Discrete Optimization Benchmarking (BB-DOB@PPSN'18) Workshop

- 2018 Black-Box Discrete Optimization Benchmarking (BB-DOB@GECCO'18) Workshop

- 2018 International Workshop on Benchmarking of Computational Intelligence Algorithms (BOCIA'18)