at the Genetic and Evolutionary Computation Conference (GECCO 2019)

July 13-17, 2019, Prague, Czech Republic

http://iao.hfuu.edu.cn/bbdob-gecco19

The Black-Box Discrete Optimization Benchmarking (BB-DOB) Workshop, a part of the Genetic and Evolutionary Computation Conference (GECCO) 2019, is cordially inviting the submission of original and unpublished research papers.

The Black-Box-Optimization Benchmarking (BBOB) methodology associated to the BBOB-GECCO workshops has become a well-established standard for benchmarking stochastic and deterministic continuous optimization algorithms. The aim of the BB-DOB workshop series is to set up a process that will allow to achieve a similar standard methodology for the benchmarking of black box optimization algorithms in discrete and combinatorial search spaces.

Here you can download the BBDOB Workshop Call for Papers (CfP) in PDF format and here as plain text file.

The long term aim of our workshop series is to produce, for the domain of discrete optimization:

- a well-motivated benchmark function testbed,

- an experimental set-up,

- methods for the generation of data output for post-processing and

- proper presentations of the results in graphs and tables.

The aims of this GECCO 2019 BB-DOB workshop are to finalize the benchmarking testbed for discrete optimization and to promote a discussion of which performance measures should be used.

The benchmark functions should capture the difficulties of combinatorial optimization problems in practice. They also should be comprehensible so that algorithm behaviors can be understood or interpreted according to the performance on a given benchmark problem. The goal is that a desired search behavior can be pictured and algorithm deficiencies can be understood in depth. This understanding will lead to the design of improved algorithms. Ideally, we would like the benchmark functions to be scalable with the problem size and non-trivial in the black box optimization sense (the function may be shifted such that the global optimum may be any point). Achieving this goal would help greatly in bridging the gap between theoreticians and experimentalists.

We also wish to investigate which measures should be used to compare algorithm performance, which statistical tests should be run to compare algorithms, and how to deal with unsuccessful runs.

This workshop wants to bring together experts on benchmarking of optimization algorithms. It will provide a common forum for discussions and exchange of opinions. Interested participants are encouraged to submit a paper related to black-box optimization benchmarking of discrete optimizers. The topics of interesting especially include papers that

- suggest functions to be included in the benchmark and motivate the reasons for inclusion,

- suggest benchmark function properties that allow to capture difficulties which occur in real-world applications (e.g., deception, separability, etc.),

- suggest which classes of standard combinatorial optimization problems should be included and how to select significant instances,

- suggest which classes of toy problems should be included and motivate why,

- suggest which performance measures should be used to analyze and compare algorithms and comment/suggestions on related issues, and/or

- tackle any other aspect of benchmarking methodology for discrete optimizers such as design of experiments, presentation methods, benchmarking frameworks, etc.

- conduct performance comparisons, landscape analysis, discussion of selected benchmark problems and/or provided statistics of IOHprofiler, a ready-to-use software for the empirical analysis of iterative optimization heuristics

For more information please contact Pietro S. Oliveto at

This workshop is organized as part of ImAppNIO (Cost Action 15140).

Important Dates

| Paper Submission Opening: | 27 | February | 2019 |

| Paper Submission Deadline: | 10 | April | 2019 (extended) |

| Decisions Due: | 17 | April | 2019 |

| Camera-Ready Material Due: | 24 | April | 2019 |

| Author Registration Deadline: | 24 | April | 2019 |

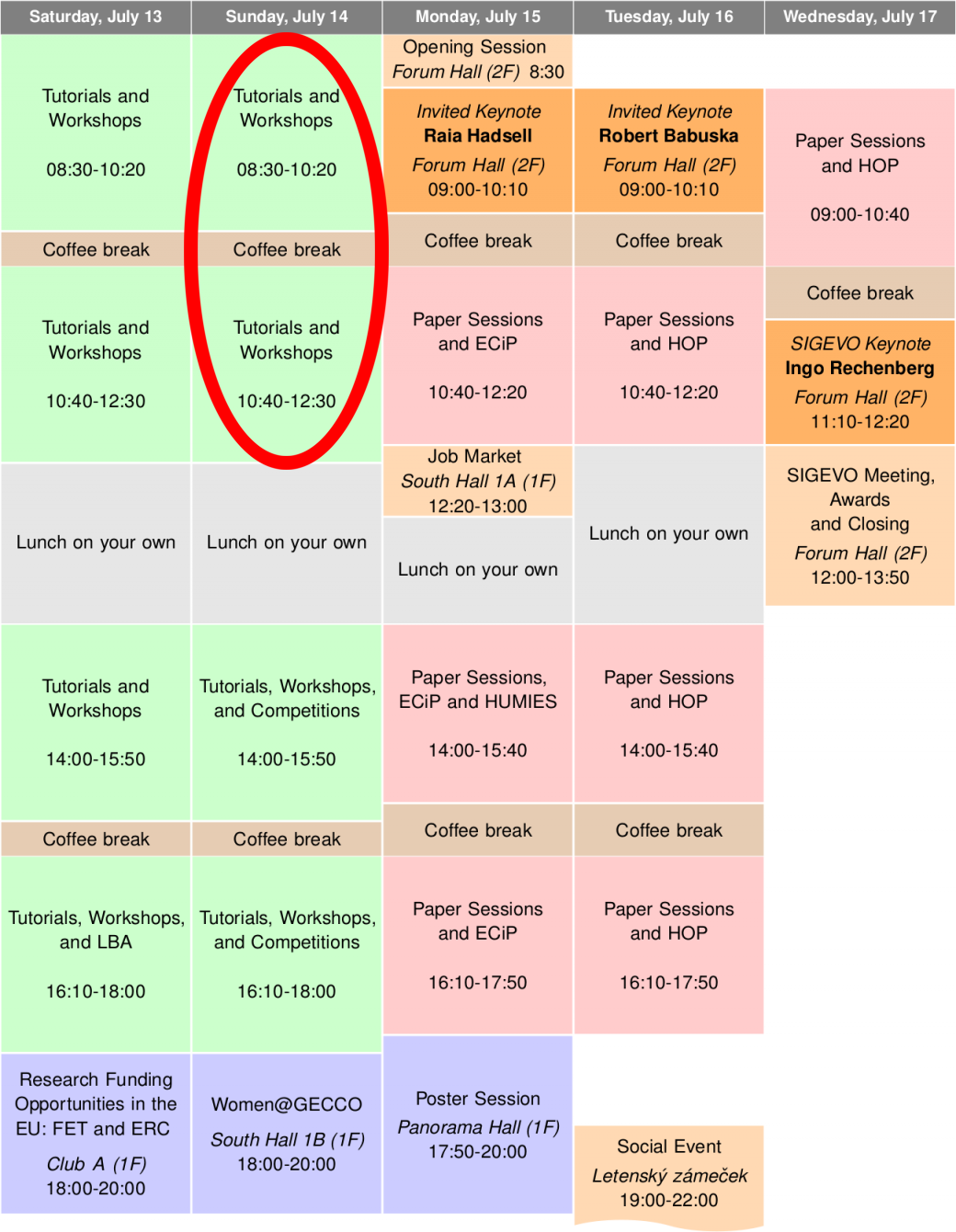

| Conference Presentation: | 14 | July | 2019 |

Program

Our workshop had two sessions on Sunday, July 14, 2019 in room Club A. We had a great participation and there were many interesting talks and discussions.

Session 1: Sunday, July 14, 2019, 08:30-10:20, room Club A

- 08:30-08:40: Pietro S. Oliveto. Introduction to the workshop.

- 08:40-09:05: Ivan Ignashov, Arina Buzdalova, Maxim Buzdalov, and Carola Doerr. Illustrating the Trade-Off between Time, Quality, and Success Probability in Heuristic Search.

Benchmarking aims to investigate the performance of one or several algorithms for a set of reference problems by empirical means. An important motivation for benchmarking is the generation of insight that can be leveraged for designing more efficient solvers, for selecting a best algorithm, and/or for choosing a suitable instantiation of a parametrized algorithm. An important component of benchmarking is its design of experiment (DoE), which comprises the selection of the problems, the algorithms, the computational budget, etc., but also the performance indicators by which the data is evaluated. The DoE very strongly depends on the question that the user aims to answer. Flexible benchmarking environments that can easily adopt to users’ needs are therefore in high demand. With the objective to provide such a flexible benchmarking environment, the recently released IOHprofiler not only allows the user to choose the sets of benchmark problems and reference algorithms, but provides in addition a highly interactive, versatile performance evaluation. However, it still lacks a few important performance indicators that are relevant to practitioners and/or theoreticians. In this discussion paper we focus on one particular aspect, the probability that a considered algorithm reaches a certain target value within a given time budget. We thereby suggest to extend the classically regarded fixed-target and fixed-budget analyses by a fixed-probability measure. Fixed-probability curves are estimated using Pareto layers of (target, budget) pairs that can be realized by the algorithm with the required certainty. We also provide a first implementation towards a fixed-probability module within the IOHprofiler environment.

- 09:05-09:30: Borja Calvo, Ofer M. Shir, Josu Ceberio, Carola Doerr, Hao Wang, Thomas Bäck, and Jose A. Lozano. Bayesian Performance Analysis for Black-Box Optimization Benchmarking.

The most commonly used statistics in Evolutionary Computation (EC) are of the Wilcoxon-Mann-Whitney-test type, in its either paired or non-paired version. However, using such statistics for drawing performance comparisons has several known drawbacks. At the same time, Bayesian inference for performance analysis is an emerging statistical tool, which has the potential to become a promising complement to the statistical perspectives offered by the aforementioned p-value type test. This work exhibits the practical use of Bayesian inference in a typical EC setting, where several algorithms are to be compared with respect to various performance indicators. Explicitly, we examine performance data of 11 evolutionary algorithms (EAs) over a set of 23 discrete optimization problems in several dimensions. Using this data, and following a brief introduction to the relevant Bayesian inference practice, we demonstrate how to draw the algorithms’ probabilities of winning. Apart from fixed-target and fixed-budget results for the individual problems, we also provide an illustrative example per groups of problems. We elaborate on the computational steps, explain the associated uncertainties, and articulate considerations such as the prior distribution and the sample sizing. We also present as a reference the classical p-value tests.

- 09:30-09:55: Maxim Buzdalov. Towards Better Estimation of Statistical Significance when Comparing Evolutionary Algorithms.

The use of well-established statistical testing procedures to compare the performance of evolutionary algorithms often yields pessimistic results. This requires increasing the number of independent samples, and thus the computation time, in order to get results with the necessary precision. We aim at improving this situation by developing statistical tests that are good in answering typical questions coming from benchmarking of evolutionary algorithms. Our first step, presented in this paper, is a procedure that determines whether the performance distributions of two given algorithms are identical for each of the benchmarks. Our experimental study shows that this procedure is able to spot very small differences in the performance of algorithms while requiring computational budgets which are by an order of magnitude smaller (e.g. 15x) compared to the existing approaches.

- 09:55-10:20: Aleš Zamuda. Binary 100-Digit Challenge using IEEE-754 Coded Numerical Optimization Scenarios (100b-Digit) and V-shape Binary Distance-based Success History Differential Evolution (DISHv).

This paper proposes a new discrete optimization benchmark 100b- Digit, a binary discretized version for the 100-Digit Challenge. The continuous version 100-Digit Challenge utilizing continuous input parameters for a fitness function was suggested for the competitions at 2019 GECCO and 2019 CEC, while this paper proposes an extension, a discrete version of the 100-Digit Challenge, discretizing using an IEEE-754 double precision floating-point representation for the search space variables. Furthermore, the paper also presents a viable recent state-of-theart algorithm discretization to be applicable on the new 100b-Digit benchmark. V-shape transfer function is applied for binarization of the variables from the Distance-based Success History Differential Evolution (DISH) to create the new DISHv algorithm, which is then run on the 100b-Digit benchmark. The preliminary results for the DISHv algorithm are then reported for a basic value-to-reach termination criterion over 100b-Digit functions. This combination of 100b-Digit benchmark and DISHv algorithm demonstrates how to bridge a gap between recent continuous optimizers and different discrete benchmarks, and vice-versa.

Session 2: Sunday, July 14, 2019 10:40-12:30, room Club A

- 10:40-11:05: Markus Wagner. Kinder Surprise's Debut in Discrete Optimisation — A Real-World Toy Problem that can be Subadditive.

Kinder SurpriseTM are chocolate eggs that house a small toy. Fans of the toys across the world are collecting and trading these toys, with some toys being very rare and highly priced. With this paper, we present and publish a data set that was extracted from the German Kinder Surprise pricing guide for 2019. The data set contains prices and photos of 187 sets of figurines produced between 1979 and 2018. In total 2366 items are included. We also provide a few first ideas for using this data as a benchmark data set for discrete optimisation, i.e., for subadditive functions with matroid constraints, for combinatorial auctions, and for functions with occasionally violated conditions.

- 11:05-11:30: Carola Doerr, Furong Ye, Naama Horesh, Hao Wang, Ofer M. Shir, and Thomas Bäck. Benchmarking Discrete Optimization Heuristics with IOHprofiler.

Automated benchmarking environments aim to support researchers in understanding how different algorithms perform on different types of optimization problems. Such comparisons carry the potential to provide insights into the strengths and weaknesses of different approaches, which can be leveraged into designing new algorithms. Carefully selected benchmark problems are also needed as training sets in the context of algorithm selection and configuration. With the ultimate goal to create a meaningful benchmark set for iterative optimization heuristics, we compile and assess in this work a selection of discrete optimization problems that subscribe to different types of fitness landscapes. All problems have been implemented and tested within IOHprofiler, our recently released software built to assess iterative heuristics solving combinatorial optimization problems. For each selected problem we compare performances of eleven different heuristics. Apart from fixed-target and fixed-budget results for the individual problems, we also derive ECDF results for groups of problems. To obtain these, we have implemented an add-on for IOHprofiler, which allows aggregation of performance data across different benchmark functions.

- 11:30-12:30: Panel Discussion on Benchmarking. Members:

- Thomas Bäck, Leiden University, Leiden, The Netherlands

- Johann Dreo, Thales Research & Technology, Palaiseau, France

- Jose A. Lozano, University of the Basque Country, Bilbao, Spain

- Boris Naujoks, TH Köln, Cologne, Germany

- Olivier Teytaud, Facebook Artificial Intelligence lab, Paris, France

- Vanessa Volz, Game AI Research Group, Queen Mary University of London, London, UK

- Markus Wagner, University of Adelaide, Adelaide, SA, Australia

- Moderator: Carola Doerr, Sorbonne University, Paris, France

Photos

Chairs

- Carola Doerr, Sorbonne University, Paris, France

- Pietro S. Oliveto, University of Sheffield, UK

- Thomas Weise, Institute of Applied Optimization, Hefei University, Hefei, China

- Borys Wróbel, Adam Mickiewicz University, Poland

- Aleš Zamuda, University of Maribor, Slovenia

International Program Committee

- Abhishek Awasthi, University of Applied Sciences Zittau/Görlitz, Görlitz, Germany

- Thomas Bäck, Leiden University, Leiden, The Netherlands

- Thomas Bartz-Beielstein, Technical University of Cologne, Köln (Cologne), Germany

- Andreas Beham, FH Upper Austria, Hagenberg, Austria

- Maxim Buzdalov, ITMO University, St. Petersburg, Russia

- Arina Buzdalova, ITMO University, St. Petersburg, Russia

- Josu Ceberio Uribe, University of the Basque Country, Bilbao, Spain

- Francisco Chicano, University of Málaga, Málaga, Spain

- Carola Doerr, Sorbonne University, Paris, France

- Johann Dreo, Thales Research & Technology, Palaiseau, France

- Tome Eftimov, Biomedical Data Science, Stanford University, Stanford, California, USA

- Aniko Ekart, Aston University, Birmingham, UK

- Thomas Jansen, Aberystwyth University, UK

- Pascal Kerschke, Westfälische Wilhelms-Universität Münster, Münster, Germany

- Algirdas Lančinkas, Vilnius University, Lithuania

- Johannes Lengler, ETH Zürich, Zürich, Switzerland

- Bin Li, University of Science and Technology of China, Hefei, China

- Jinlong Li, University of Science and Technology of China, Hefei, China

- Xinlu Li, Institute of Applied Optimization, Hefei University, Hefei, China

- Arnaud Liefooghe, Université de Lille Sciences et Technologies, Lille, France

- Miguel Nicolau, University College Dublin, Ireland

- Frank Neumann, University of Adelaide, Adelaide, SA, Australia

- Pietro S. Oliveto, University of Sheffield, UK

- Karol Opara, Systems Research Institute, Polish Academy of Sciences, Warsaw, Poland

- Chao Qian, University of Science and Technology of China, Hefei, China

- Mohammad Ali Raayatpanah, Kharazmi University, Tehran, Iran

- Roman Senkerik, Tomas Bata University, Zlin, Czech Republic

- Dirk Sudholt, University of Sheffield, UK

- Heike Trautmann, Westfälische Wilhelms-Universität Münster, Münster, Germany

- Markus Ullrich, University of Applied Sciences Zittau/Görlitz, Görlitz, Germany

- Markus Wagner, University of Adelaide, Adelaide, SA, Australia

- Stefan Wagner, FH Upper Austria, Hagenberg, Austria

- Hao Wang, Leiden University, Leiden, The Netherlands

- Thomas Weise, Institute of Applied Optimization, Hefei University, Hefei, China

- Carsten Witt, Technical University of Denmark, Denmark

- Borys Wróbel, Adam Mickiewicz University, Poland

- Yuezhong Wu, University of New South Wales (UNSW), Sydney, Australia

- Zhize Wu, Institute of Applied Optimization, Hefei University, Hefei, China

- Aleš Zamuda, University of Maribor, Slovenia

- Christine Zarges, Aberystwyth University, Aberystwyth, Wales, United Kingdom

- Xingyi Zhang, Anhui University, Hefei, China

Chair Biographies

Carola Doerr is a permanent CNRS researcher at Sorbonne University in Paris, France. She studied Mathematics at Kiel University (Germany, Diplom, 2007) and Computer Science at the Max Planck Institute for Informatics and Saarland University (Germany, PhD, 2011). Before joining the CNRS she was a post-doc at Paris Diderot University (Paris 7) and the Max Planck Institute for Informatics. From 2007 to 2009, she worked as a business consultant for McKinsey & Company, where her interest in evolutionary algorithms (EAs) originates from. Her main research activities are in the mathematical analysis of randomized algorithms, with a strong focus on EAs and other black-box optimizers. She has been very active in the design and analysis of black-box complexity models, a theory-guided approach to explore the limitations of heuristic search algorithms. Most recently, she has used knowledge from these studies to prove superiority of dynamic parameter choices in evolutionary computation, a topic that she believes to carry huge unexplored potential for the community. Carola Doerr has received several awards for her work on evolutionary computation, among them the Otto Hahn Medal of the Max Planck Society and four best paper awards at GECCO. She is chairing the program committee of FOGA 2019 and previously chaired the theory tracks of GECCO 2015 and 2017. She is editor of two special issues in Algorithmica and vice chair of the EU-funded COST action 15140 on "Improving Applicability of Nature-Inspired Optimisation by Joining Theory and Practice (ImAppNIO)".

Pietro S. Oliveto is a Senior Lecturer and an EPSRC Early Career Fellow at the University of Sheffield,UK. He re-ceived the Laurea degree in computer science from the University of Catania, Italy in 2005 and the PhD degree from the University of Birmingham,UK in 2009. He has been EPSRC PhD+ Fellow (2009-2010) and EPSRC Postdoctoral Fellow (2010-2013) at Birmingham and Vice-Chancellor's Fellow at Sheffield (2013-2016). His main research interest is the performance analysis of bio-inspired computation techniques including evolutionary algorithms, genetic programming, artificial immune systems and hyperheuristics. He has won best paper awards at GECCO 2008, ICARIS 2011 and GECCO 2014. He is part of the Steering Committee of the annual workshop on Theory of Randomized Search Heuristics (ThRaSH), Associate Editor of the IEEE Transactions on Evolution-ary Computation, Chair of the IEEE CIS Technical Committee on Evolutionary Computation, Leader of the ImAppNIO Cost Action Working Group on Benchmarking and member of the EPSRC Peer Review College. Dr. Oliveto has given tutorials on the runtime complexity analysis of EAs regularly at CEC, GECCO, WCCI, SSCI and PPSN since 2012.

Thomas Weise obtained the MSc in Computer Science in 2005 from the Chemnitz University of Technology and his PhD from the University of Kassel in 2009. He then joined the University of Science and Technology of China (USTC) as PostDoc and subsequently became Associate Professor at the USTC-Birmingham Joint Research Institute in Intelligent Computation and Its Applications (UBRI) at USTC. In 2016, he joined Hefei University as Full Professor to found the Institute of Applied Optimization at the Faculty of Computer Science and Technology. Prof. Weise has more than seven years of experience as a full time researcher in China, having contributed significantly both to fundamental as well as applied research. He has more than 80 scientific publications in international peer reviewed journals and conferences. His book "Global Optimization Algorithms – Theory and Application" has been cited more than 730 times. He has acted as reviewer, editor, or programme committee member at 70 different venues.

Borys Wróbel works at the intersection between biology and computer science, and his current research interests include computational properties of biologically-inspired models of computation (artificial gene regulatory networks and spiking neural networks), which involves building artificial life software platforms that use high-performance computing and neuromorphic hardware. Borys Wróbel received his PhD at the University of Gdańsk (Poland) in 1998, was a Fulbright Visiting Researcher in the Salk Institute for Biological Studies in San Diego, CA, and later FEBS and EMBO Fellow at the Hebrew University of Jerusalem (Israel), Marie Curie Postdoctoral Fellow at the University of Valencia, and Sciex Fellow in the Insitute of Neuroinformatics at the University of Zurich and ETHZ (Switzerland). He is now a professor at the Adam Mickiewicz University in Poznań, Poland. He is one of the vice-chairs of the ImAppNIO working group on Benchmarking.

Aleš Zamuda is an Assistant Professor and Researcher at University of Maribor (UM), Slovenia. He received Ph.D. (2012), M.Sc. (2008), and B.Sc. (2006) degrees in computer science from UM. He is management committee (MC) member for Slovenia at European Cooperation in Science (COST), actions CA15140 (ImAppNIO - Improving Applicability of Nature-Inspired Optimisation by Joining Theory and Practice) and IC1406 (cHiPSet - High-Performance Modelling and Simulation for Big Data Applications). He is IEEE Senior Member, IEEE Slovenia Section Vice Chairman and Young Professionals Chairman, IEEE CIS member, ACM SIGEVO member, ImAppNIO Benchmarks working group vice-chair, and editorial board member (associate editor) for Swarm and Evolutionary Computation (2017 IF=3.818). His areas of computer science applications include ecosystems, evolutionary algorithms, multicriterion optimization, artificial life, and computer animation; currently yielding h-index 18, 41 publications, and 883 citations on Scopus. He won IEEE R8 SPC 2007 award, IEEE CEC 2009 ECiDUE, 2016 Danubuius Young Scientist Award, and 1% top reviewer at 2017 and 2018 Publons Peer Review Awards, including reviews for over 40 journals and 85 conferences.

Instructions for Authors

Instructions regarding how to submit papers and the paper format are given at https://gecco-2019.sigevo.org/index.html/tiki-index.php?page=Workshops. Some of the things to consider are:

- Submitted papers must be ANONYMIZED, i.e., cannot contain any element that may reveal the identity of their authors, in order to facilitate a double-blind review process.

- The maximum paper length is eight pages.

- The maximum number of words in the abstract is 200.

- In the GECCO submission page, select "Workshop Paper" and in the field "Workshop" of the next form, select "Workshop Black Box Discrete Optimization Benchmarking".

- At least one author from each accepted paper must register at the conference by April 24, 2019, pay the conference fee, and be present at the conference to give an oral presentation.

For more information please contact Pietro S. Oliveto at

Hosting Event

The Genetic and Evolutionary Computation Conference (GECCO 2019)

July 13-17, 2019, Prague, Czech Republic

http://gecco-2019.sigevo.org

The Genetic and Evolutionary Computation Conference (GECCO 2019) will present the latest high-quality results in genetic and evolutionary computation. Topics include genetic algorithms, genetic programming, evolution strategies, evolutionary programming, memetic algorithms, hyper-heuristics, real-world applications, evolutionary machine learning, evolvable hardware, artificial life, adaptive behavior, ant colony optimization, swarm intelligence, biological applications, evolutionary robotics, coevolution, artificial immune systems, and more. The full list of tracks is available at: https://gecco-2019.sigevo.org/index.html/Program+Tracks.

The GECCO 2019 Program Committee invites the submission of technical papers describing your best work in genetic and evolutionary computation. Full papers of at most 8 pages (excluding references) should present original work that meets the high-quality standards of GECCO. Accepted full papers appear in the ACM digital library as part of the Main Proceedings of GECCO. For full papers, a separate abstract needs to be submitted first by January 30, 2019. Full papers are due by the non-extensible deadline of February 6, 2019.

Each paper submitted to GECCO will be rigorously evaluated in a double-blind review process. Evaluation is done on a per-track basis, ensuring high interest and high expertise of the reviewers. Review criteria include the significance of the work, technical soundness, novelty, clarity, writing quality, relevance and, if applicable, sufficiency of information to permit replication.

Besides full papers, poster-only papers of at most 2 pages may be submitted. Poster-only papers should present original work that has not yet reached the maturity and completeness of research results that are published as full papers at GECCO. The review of poster-only papers follows the same double-blind process described above. Accepted poster-only papers will appear in the ACM digital library as part of the Companion Proceedings of GECCO. Poster-only papers are due by the non-extensible deadline of February 6, 2019, and no abstract needs to be submitted first.

By submitting a paper, the author(s) agree that, if their paper is accepted, they will:

- Submit a final, revised, camera-ready version to the publisher on or before the camera-ready deadline

- Register at least one author to attend the conference on April 17, 2019

- Attend the conference (at least one author)

- Present the accepted paper at the conference

Each paper accepted needs to have at least one author registered. If an author is presenting more than one paper at the conference, she/he does not pay any additional registration fees.

News

- tweet by Johann Dreo @j_dreo, 14 Jul 2019

- tweet by Carlos Cotta @ccottap, 14 Jul 2019

- tweet by Rigorous Research, @RigResearch, 14 July 2019

- tweet by Rigorous Research, @RigResearch, 13 July 2019

- tweet by Rigorous Research, @RigResearch, 13 July 2019

- tweet by Rigorous Research, @RigResearch, 13 July 2019

- tweet by Aleš Zamuda, @aleszamuda, 13 July 2019

- tweet by Rigorous Research, @RigResearch, 23 March 2019

- tweet by Aleš Zamuda, @aleszamuda

- tweet by Aleš Zamuda, @aleszamuda

- tweet by Aleš Zamuda, @aleszamuda

- tweet by Aleš Zamuda, @aleszamuda

- BB-DOB@GECCO 2019: Program Released

Related Events

- Special Session on Benchmarking of Computational Intelligence Algorithms (BOCIA'21)

- Special Issue on Benchmarking of Computational Intelligence Algorithms in the Applied Soft Computing Journal

- Special Session on Benchmarking of Evolutionary Algorithms for Discrete Optimization (BEADO)

- in the past: Black-Box Discrete Optimization Benchmarking (BB-DOB@PPSN) Workshop

- in the past: Black-Box Discrete Optimization Benchmarking (BB-DOB@GECCO) Workshop

- in the past: International Workshop on Benchmarking of Computational Intelligence Algorithms (BOCIA)